Selecting Data to Meet Business Needs

Using published Tableau data sources is the cornerstone of implementing a strong data governance strategy. How do you translate business questions into Tableau published data sources? First, identify the business needs and determine which sources of raw data meet those needs and which dimensions and measures are important to each line of business. Select data prioritized by business requirements and use cases. Document the controls, roles, and repeatable processes to make the appropriate data available to the corresponding audience.

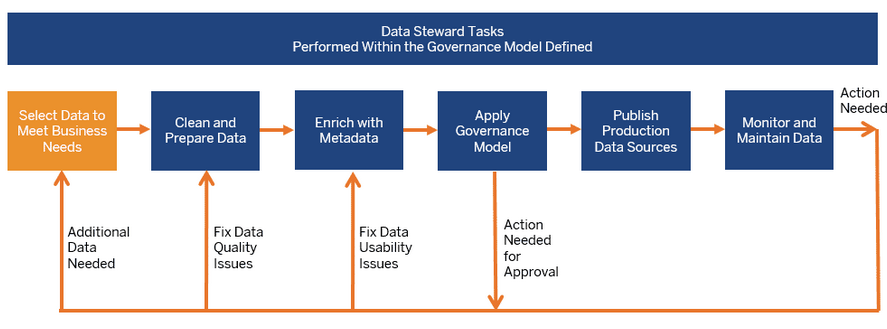

The results of this data discovery and governance plan will inform the content and scope of the Tableau published data sources, as well as the steps needed to clean and prepare them. This process of selecting data to support business needs is the first step in the data steward's workflow. We'll flesh out the details in this module.

The first step in the data steward workflow is to conduct a data survey and select data prioritized by business requirements. This task is repeated as users require additional data or business needs change.

A glance at the workflow

What questions should be asked to identify business needs and sources of data?

Before the initial deployment of Tableau software, conduct a data survey for each line of business, department, or team. The purpose of the survey is to identify sources of data that are important to each job function and how data is currently distributed and consumed. From this survey, data sources can be prioritized to determine which will be certified and available at launch.

If this is not a new deployment, use the survey to audit the data sources currently in use. From that list, identify which need additional cleaning, preparation, or enrichment with metadata. Then, periodically repeat your data source audit using the data survey. Some data sources will no longer be needed, while entirely new ones will be required. Beyond the initial use cases, this gives you a repeatable process for adding new data sources over time as the deployment progresses.

Successful data stewards actively engage with users on their data source needs. You can use the Data and Analytics Survey found in the Blueprint Planner, which is available for download in the Resources lesson.

Data Selection and Management

In the future state:

- What business problems/questions need to be solved/answered?

- How does your team source data (data warehouse, file exports, third-party)?

- What are the key sources of data for the team?

- How big is the data?

- How and when is it currently loaded? How fresh does it need to be? How fast are the databases?

- How often does the data change?

- How often does the business change?

Security

In the future state:

- How is data secured? What security classifications (e.g., secret, confidential, internal, public, etc.) are needed?

- Will row-level security or data personalization for each user be needed?

Distribution

In the current state:

- How is data distributed?

- How frequently is data distributed?

- What formats are used?

- Who prepares reports for distribution? Who are the recipients?

- Do users share data? If so, how do they share and what are the rules? Who are the recipients? Does the information shared differ based on the recipient, e.g., executives vs. analysts?

Consumption

In the current state:

- How is data consumed? Will this be a new or a replacement solution/report?

- Do consumers export and perform additional manipulation in the context of your team?

- How is data used in the context of the recipient's job/role?

Tips and best practices for questions

- Consider every source of data—from text files, reports distributed via email, and local database files, to the enterprise data warehouse, cloud applications, and external sources.

- Work with the data you have, then determine how to automate and optimize.

- As new lines of business, departments, or teams are added, conduct the survey to ensure you are meeting their data needs.

- Use the Tableau Blueprint planner listed in the module resources to document the results of the data survey.

How should data sources be prioritized?

Once the data survey results have been collected and collated for each department and team in the organization, discover and prioritize the sources of data that will be most impactful for the participating business teams by identifying initial use cases across departments.

The data sources will be used to build dashboards, each of which has a specific audience, or group of users, who should view it and use it for analysis. Consider the audience when determining how impactful a source of data might be.

IT

- Hardware/software asset inventory

- Help desk tickets/call volume/resolution time

- Resource allocation

- Security patch compliance

Finance

- Budget planning and spending

- Accounts receivable

- Travel expenses

Marketing

- Campaign engagement

- Web engagement

- Leads

Human Resources

- Turnover rate

- Open headcount

- New hire retention

- Employee satisfaction

Sales

- Sales/quota tracking

- Pipeline coverage

- Average deal size

- Win/loss rate

Facilities and Operations

- Physical locations

- Call center volume/workload distribution

- Work request volume/resolution time

Tips and best practices for use cases

- When uncovering use cases, create a matrix that helps you identify low complexity/high impact use cases first to demonstrate quick wins.

- Select use cases and users across departments starting with the sources of data used most frequently.

- For every participating team, set the goal of one published data source and one workbook for them to use after onboarding.
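The matrix described in the first tip can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the 1 to 5 scoring scale, the threshold, the scores themselves, and the quadrant labels other than "quick win" are all hypothetical, and the use case names are borrowed from the department examples above.

```python
from dataclasses import dataclass


@dataclass
class UseCase:
    name: str
    department: str
    impact: int      # hypothetical scale: 1 (low) to 5 (high)
    complexity: int  # hypothetical scale: 1 (low) to 5 (high)


# Order in which quadrants are tackled; the labels are illustrative.
QUADRANT_ORDER = ["quick win", "strategic project", "fill-in", "deprioritize"]


def quadrant(uc: UseCase, threshold: int = 3) -> str:
    """Place a use case in one cell of a 2x2 impact/complexity matrix."""
    high_impact = uc.impact >= threshold
    low_complexity = uc.complexity < threshold
    if high_impact and low_complexity:
        return "quick win"
    if high_impact:
        return "strategic project"
    if low_complexity:
        return "fill-in"
    return "deprioritize"


def prioritize(use_cases):
    """Quick wins first, then by highest impact, then by lowest complexity."""
    return sorted(
        use_cases,
        key=lambda uc: (QUADRANT_ORDER.index(quadrant(uc)), -uc.impact, uc.complexity),
    )


# Scores below are invented for illustration only.
use_cases = [
    UseCase("Help desk ticket volume", "IT", impact=4, complexity=2),
    UseCase("Budget planning and spending", "Finance", impact=5, complexity=4),
    UseCase("Campaign engagement", "Marketing", impact=3, complexity=1),
    UseCase("Physical locations", "Facilities and Operations", impact=2, complexity=2),
]

for uc in prioritize(use_cases):
    print(f"{uc.department:26} {uc.name:30} {quadrant(uc)}")
```

In practice the scores would come from the data survey and from conversations with each team, not from a fixed formula.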

Estimate the expected audience size for the data and related dashboards so that you can compare it with actual utilization later. Focus on the 80% of the questions you can answer easily; don't get held up by edge cases. As people answer their simple questions, they will move on to more sophisticated analysis.

If using Tableau Online, consider whether you can use any of the Dashboard Starters, which cover many common use cases within various lines of business, such as email marketing and sales opportunities. Use the Tableau Blueprint planner listed in the module resources to document the identified use cases.

Document data governance processes

Review of governance in Tableau

As covered in the Understanding Governance and Your Role in It module of the Tableau Server and Tableau Online Basics course, both content and data are governed in Tableau.

Each type of governance involves multiple areas, as shown in the following diagram.

The governance capabilities of Tableau are flexible. While there are best practices that should be followed, there is no single right way to implement governance. This flexibility allows your organization to adopt the governance model that works best for your needs.

Tableau recommends three types of governance models:

- Centralized

- Delegated

- Self-governing

Centralized Model

- IT or another authority owns data access and produces data sources and dashboards.

- This model uses a one-to-many approach with a small number of Creators and everyone else as Viewers.

- This model is often used for highly sensitive data or as a transition stage while users gain analytical skills.

Delegated Model

- This model introduces new roles (such as Data Steward and Author) that were not part of IT or a central authority. These new roles have additional access to sources of data and web authoring capabilities.

- Processes are put in place to validate, promote, and certify content.

- There is increased collaboration between IT and business users as IT shifts from a provider of reports to an enabler of analytics.

Self-Governing Model

- Ad-hoc content created by Creators and Explorers exists alongside certified content from IT or a central authority.

- The validation, promotion, and certification process is well-known and understood by users of all skill levels.

- There is a strong collaboration between IT and business users, and role boundaries are fluid as users switch from consuming to creating to promoting content.

What questions need to be asked to document the data governance standards, policies, and procedures?

For each data governance area shown in the image above, data stewards document who is responsible and what processes support each area within each model: centralized, delegated, and self-governing.

Mix and match across governance models (centralized, delegated, self-governing) by using the matrix approach to separate data and content governance and segmenting by the three models. For example, data and content governance may be centralized at the start. Then, after user training, data governance areas may be centralized, but content governance is delegated or self-governing because the data is curated. Similarly, specific areas within data and content governance can be tailored, such as delegated metadata management and centralized security and permissions, to meet your unique requirements. As business users' analytical capabilities grow, more responsibilities can be delegated over time.

Data Source Management

Data source management includes processes related to selection and distribution of data within your organization. The data source management processes that you put in place help avoid duplicate data sources.

- What are the key sources of data for your departments or teams?

- Who is the data steward or owner of the data?

- Do variants of a data set exist? If so, can they be consolidated as an authoritative source?

- If multiple data sources are consolidated, does the single data source performance or utility suffer by attempting to fulfill too many use cases at once?

- How will workbooks and data sources be shared across your company?

- What business questions need to be answered by the data source?

- What naming conventions are used for published data sources?

Data Quality

Data quality involves an assessment of data's fitness to serve its purpose in a given context. Factors such as accuracy, relevance, and freshness determine data quality.

- What processes exist for ensuring accuracy, completeness, reliability, and relevance?

- Have you developed a checklist to operationalize the process?

- Who needs to review data prior to it becoming shared and trusted?

- Is your process adaptable to business users and are they able to partner with data owners to report issues?

Data Enrichment

Data enrichment and preparation are the processes used to enhance, refine, or prepare raw data for analysis.

- Will data enrichment and preparation be centralized or self-service?

- Which organizational roles perform data enrichment and preparation?

- Which ETL tools and processes should be used to automate enrichment and/or preparation?

- What sources of data provide valuable context when combined with each other?

- How complex are the data sources to be combined?

- Will users be able to use Tableau Prep Builder and/or Tableau Desktop to combine data sets?

- Have standardized join or blend fields been established to enable users to enrich and prepare data sets?

- How will you enable self-service data preparation?

Data Security

Data security encompasses all of the protective measures applied to prevent unauthorized access to data. Security measures may vary for different types of data and levels of security.

- How do you classify different types of data according to their sensitivity?

- How does someone request access to data?

- Will you use a service account or database security to connect to data?

- What is the appropriate approach to secure data according to sensitivity classification?

- Does your data security meet legal, compliance, and regulatory requirements?

- Have you considered creating workflows that address both direct and restricted sources of data and workbooks?

Metadata Management

Metadata management refers to the policy and process creation that ensures information can be accessed, analyzed, and maintained.

- What is the process for curating data sources?

- Has the data source been sized to the analysis at hand?

- What is your organizational standard for naming conventions and field formatting?

- Does the metadata model meet all criteria for curation, including user-friendly naming conventions?

- Has the metadata checklist been defined, published, and integrated into the validation, promotion, and certification processes?
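Part of a metadata checklist can be automated. The sketch below is a minimal illustration, not a Tableau feature: the naming pattern ("Department - Subject (Live)" or "(Extract)") and the rules for what counts as an unfriendly field caption are hypothetical conventions invented for this example. Your organizational standard would replace them.

```python
import re

# Hypothetical convention: "<Department> - <Subject> (Live|Extract)"
NAME_PATTERN = re.compile(
    r"^[A-Z][A-Za-z]+(?: [A-Za-z]+)* - [A-Z][A-Za-z0-9 ]+ \((Live|Extract)\)$"
)


def follows_naming_convention(data_source_name: str) -> bool:
    """Return True if the name matches the hypothetical pattern above."""
    return NAME_PATTERN.match(data_source_name) is not None


def unfriendly_fields(captions):
    """Flag captions that look like raw column names (illustrative rules only)."""
    return [c for c in captions if "_" in c or c != c.strip() or c.islower()]


print(follows_naming_convention("Sales - Pipeline Coverage (Extract)"))
print(follows_naming_convention("sales_pipeline_v2_FINAL"))
print(unfriendly_fields(["Order Date", "sls_amt", "region"]))
```

A check like this could run as one step of the validation and promotion process, with a person still making the final certification decision.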

Monitoring & Management

Monitoring and management encompass the tools and processes needed to understand how data is being used and to respond proactively.

- Are schedules available for the times needed for extract refreshes?

- How is raw data ingestion monitored from source systems? Did the jobs complete successfully?

- Is there a catalog of data sources? How will duplicate sources be identified?

- When are extract refreshes scheduled to run? How long do extracts run on the server? Did the refresh succeed or fail?

- Are subscription schedules available after extract refreshes have occurred?

- Are data sources being used? By whom? How does this compare with the expected audience size?

- What is the process to remove stale published data sources?
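Two of the questions above, comparing actual usage with the expected audience size and finding stale data sources, reduce to simple calculations once usage records are available. The sketch below assumes a hypothetical access log of (data source, user, date) tuples and a hypothetical 90-day idle threshold; the data source names, users, and numbers are invented, and how such records are obtained in a given deployment is outside the scope of this example.

```python
from datetime import date

# Expected audience sizes estimated during use case discovery (invented numbers).
expected_audience = {"Sales Pipeline": 4, "HR Headcount": 2, "Legacy Web Stats": 5}

# Hypothetical access log: (data source, user, date of access).
access_log = [
    ("Sales Pipeline", "ana", date(2024, 5, 2)),
    ("Sales Pipeline", "ben", date(2024, 5, 3)),
    ("Sales Pipeline", "ana", date(2024, 5, 10)),
    ("HR Headcount", "cho", date(2024, 4, 20)),
    ("Legacy Web Stats", "dev", date(2023, 11, 1)),
]


def utilization(log, expected):
    """Distinct users per data source divided by the expected audience size."""
    users = {name: set() for name in expected}
    for source, user, _ in log:
        users.setdefault(source, set()).add(user)
    return {name: len(users[name]) / expected[name] for name in expected}


def stale_sources(log, expected, today, max_idle_days=90):
    """Data sources never accessed, or not accessed within max_idle_days."""
    last_used = {}
    for source, _, day in log:
        if source not in last_used or day > last_used[source]:
            last_used[source] = day
    return sorted(
        name
        for name in expected
        if name not in last_used or (today - last_used[name]).days > max_idle_days
    )


print(utilization(access_log, expected_audience))
print(stale_sources(access_log, expected_audience, today=date(2024, 5, 15)))
```

A low ratio does not automatically mean a data source should be removed; it is a prompt to ask the intended audience whether the source still meets their needs.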

Translate business needs into Tableau data sources

What are Tableau data sources?

A Tableau data source is a file that allows you to save any data attributes you have edited for a connected source of data. A Tableau data source is a curated, user-friendly source of data that you can save and share with multiple users. It allows you to translate raw data with difficult, non-descriptive field names to a more intuitive user experience, where context and definition are built into the metadata to make it easier to use.

What are Tableau published data sources?

A published data source is one that has been published from Tableau Desktop or Tableau Prep Builder to Tableau Server/Online and that contains one or more reusable connections to data.

Tableau's hybrid data architecture provides two modes for interacting with data: a live query or an in-memory extract. Switching between the two is as easy as selecting the right option for your use case. In both cases, users can connect to your existing data warehouse tables, views, and stored procedures and use them with no additional work.

Live Query

.tds / Tableau data source file

A .tds / Tableau data source file is a published data source that stores data connection information and any metadata changes you've made to it. "Data connection information" is all the information required to make a live connection to an external—that is, external to Tableau Server—data source. Thus, a .tds allows many users to connect to external data sources, and have the same experience, with the same metadata settings.
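A .tds file is an XML document, so the split between connection information and metadata can be inspected with a standard XML parser. The sketch below is for illustration only: the sample XML is a simplified, hand-written stand-in (server, database, user, and field names are made up), and real .tds files contain more elements and attributes than shown here.

```python
import xml.etree.ElementTree as ET

# Simplified, hypothetical stand-in for the contents of a .tds file.
SAMPLE_TDS = """<datasource formatted-name='Sales (sample)' inline='true'>
  <connection class='postgres' dbname='analytics' server='db.example.com' username='tableau_svc' />
  <column caption='Order Date' datatype='date' name='[order_dt]' role='dimension' />
  <column caption='Sales Amount' datatype='real' name='[sls_amt]' role='measure' />
</datasource>
"""


def summarize_tds(xml_text):
    """Return (connection attributes, {raw field name: friendly caption})."""
    root = ET.fromstring(xml_text)
    # Connection information: what is needed to reach the external data.
    connections = [dict(c.attrib) for c in root.iter("connection")]
    # Metadata changes: here, only friendly captions applied to raw field names.
    captions = {
        c.get("name"): c.get("caption")
        for c in root.iter("column")
        if c.get("caption")
    }
    return connections, captions


connections, captions = summarize_tds(SAMPLE_TDS)
print(connections[0]["class"], connections[0]["server"])
print(captions)
```

Note that the file holds no rows of data, only the details needed to connect and the metadata applied on top, which is why every user of the same .tds sees the same field names and settings.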

Extracts

An extract is a snapshot of data taken from the original source. Tableau creates this snapshot by storing extracted data in columns instead of rows. This columnar data structure makes reading and writing data highly efficient, while also enabling compression for faster columnar operations (such as MIN or MAX). You can set up the extract to refresh on a set schedule.

What are some advantages of using Tableau data extracts?

Tableau data extracts are saved subsets of data, sized to the analysis at hand, that are optimized to offer the following advantages:

- Improved performance compared with views based on connections to the original data.

- Publishable to Tableau Server/Online for shared usage and scheduled updates: Extract refreshes can be set to incremental (adds only rows that are new) or complete (refreshes the entire extract).

- Fast to create: If you're working with large data sets, creating and working with extracts can be faster than working with the original data.

- Additional functionality: You can use Tableau functions that are not available in or supported by the original data, such as computing Count Distinct.

- Offline access: Save and work with extract data locally when the original data is not available.

- Large data sets: You can create extracts having billions of rows of data.
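The difference between the two refresh types mentioned above (incremental adds only new rows, complete rebuilds the entire extract) can be illustrated with plain Python lists. This is a conceptual sketch, not Tableau's implementation: the row layout, the `order_id` key, and the use of the highest existing key to decide which rows are new are all assumptions of this example.

```python
def full_refresh(source_rows):
    """Complete refresh: rebuild the extract from the current source."""
    return [dict(row) for row in source_rows]


def incremental_refresh(extract_rows, source_rows, key="order_id"):
    """Incremental refresh: append only rows whose key exceeds the highest key
    already in the extract. Existing rows are left untouched."""
    high_water = max((row[key] for row in extract_rows), default=None)
    new_rows = [
        dict(row)
        for row in source_rows
        if high_water is None or row[key] > high_water
    ]
    return extract_rows + new_rows


source_v1 = [
    {"order_id": 1, "amount": 100},
    {"order_id": 2, "amount": 250},
]
extract = full_refresh(source_v1)

# Later, order 2 is corrected in the source and order 3 is added.
source_v2 = [
    {"order_id": 1, "amount": 100},
    {"order_id": 2, "amount": 275},
    {"order_id": 3, "amount": 80},
]

incremental = incremental_refresh(extract, source_v2)
complete = full_refresh(source_v2)

print([row["amount"] for row in incremental])  # order 2 keeps its old amount
print([row["amount"] for row in complete])     # order 2 reflects the correction
```

The trade-off shown here is the one to weigh when choosing a refresh type: an incremental refresh processes less data, but changes to rows already in the extract are only picked up by a complete refresh.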

What are embedded data sources?

Embedded data sources don't exist as separate objects that can be reused like published data sources. Because an embedded data source exists only within the workbook, it does not have its own page in Tableau Server. Only published data sources have separate and distinct pages in Tableau Server.

What are some advantages of using published data sources?

- Published data sources take advantage of Tableau Server's Data Server component, which enables reuse of those curated published data sources, in an established, centralized location.

- Other advantages of published data sources include:

- Makes data sources not only reusable, but also shareable.

- Establishes a "centralized version of the truth" for the data used in Tableau Server visualizations.

- Allows certification of data sources to create trust and confidence.

- Increases data discovery when Tableau Catalog (a Data Management Add-on) is enabled as searching is expanded to include results based on fields, columns, databases, and tables.

- Preserves metadata management changes and updates in the Data pane (such as calculations, sets, groups, hierarchies, and aliases).

- Centralized database driver management on the server, rather than locally, frees each client from having to download and install database drivers, i.e., connecting to data occurs at the server level.

- Enables control of the permissions for those published data sources.

- Uses either live data or data extracts, the latter of which can have scheduled updates to the extract.

- Increases server efficiency by pooling data connections.

- Enables web authors to use curated, published data sources to create a new workbook or add to an existing workbook (including blending of data sources).

How is security managed in Tableau?

Understanding the basics of how security is managed in Tableau helps you determine what options are available to ensure the business requirements are met.